[without library] MNIST Digits Classification using Gaussian Naive Bayes

[without library] MNIST Digits Classification using Gaussian Naive Bayes

Based on - [2010] Generative and Discriminative Classifiers : Naive Bayes and Logistic Regression - Tom Mitchell

Introduction

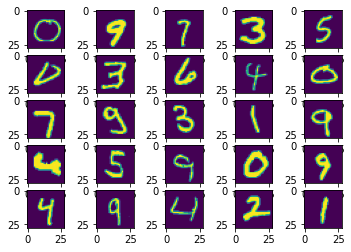

This notebook implements Gaussian Naive Bayes. It performs multi-class classification on MNIST Digits dataset consisting of images of size 28 x 28 = 784 attributes and belonging to three classes. There are 70,000 training data points (images) that are divided into 60,000 training and 10,000 test data points. The prediction accuracy is 64%.

Resources:

- CMU Qatar Lecture Notes - Naive Bayes - Gianni A. Di Caro

- CMU Machine Learning 10-701 - Pradeep Ravikumar

- CMU Machine Learning 10-701 - Tom Mitchell

- How is Naive Bayes a Linear Classifier? - stats.stackexchange

- Naive Bayes classifier - Wikipedia

Towards the end, I have included a sidebar on the comparison of Naive Bayes and Logistic Regression.

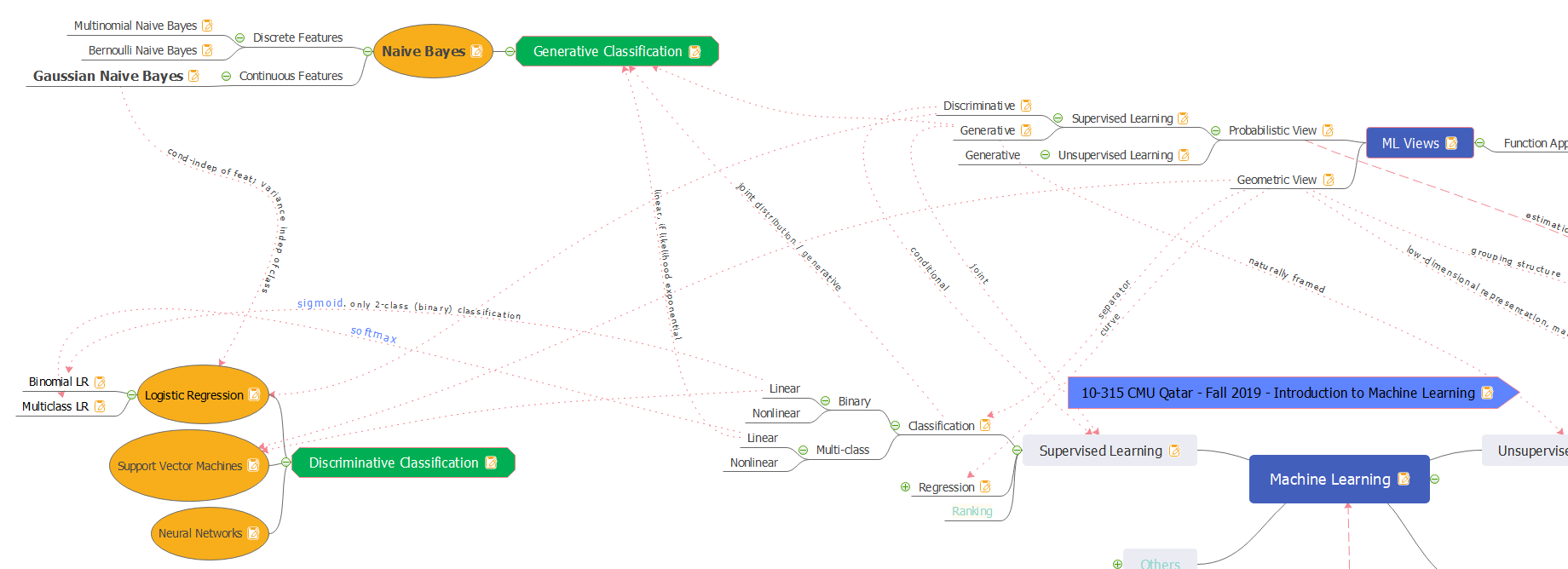

Taxonomy and Notes

Probabilistic, Generative

Naive Bayes is a probabilistic classifier. It learns the underlying joint distribution P(X, Y) by learning the likelihood distribution P(X\|Y) (a.k.a class-conditonal) and the prior distribution P(Y) (a.k.a class-prior). The distribution learnt can be used to generate data. Hence, it is a generative classifier. Naive bayes does not model the class decision boundaries, but instead models the distribution of the observed data. In Generative Modeling, we learn a full probabilistic model, the joint distribution P(X, Y). We assume a parametric form of the underlying probability distribution, and estimate those parameters from the observed data.

Parametric

Naive Bayes is parametric since the distribution assumed to model the likelihood (say, gaussian, multinomial, or bernoulli) have parameters. Gaussian distribution has parameters for mean and standard deviation.

Linear

In general, the Naive Bayes classifier is not linear. But, it becomes linear, if the likelihood is exponential. This is because the log-likelihood becomes linear in the log-space. Gaussian, Multinomial, or Bernoulli distributions are all from the exponential family of distributions, so Naive Bayes can be applied to data exhibiting these distributions. Under the assumption of an exponential distribution, Bernoulli Naive Bayes maps to Binomial Logistic Regression, and Multinomial Naive Bayes maps to Multinomial Logistic Regression.

Event Model

The assumptions on distributions of features are called the event model of the Naive Bayes classifier.

Event Model - Continuous Data (MNIST Digit Recognition, Iris Flower Species Classification)

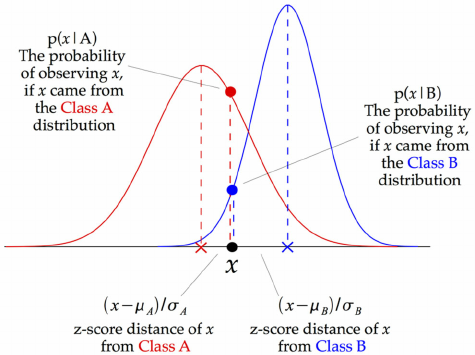

When dealing with continuous data, a typical assumption is that the continuous values associated with each class are distributed according to a normal (or Gaussian) distribution. The Iris Flower Species dataset has attributes that exhibit gaussian distribution.

When assuming Gaussian class-conditionals, if all class-conditional gaussians have the same covariance, then the quadratic terms cancel out and we are left with a linear form. To take an example of MNIST dataset, if we asume that the variance across digits is the same, then we have a linear model.

The class prior distribution has to be a discrete distribution (say, multinoulli) that distributes the probability of a data point belonging to class among K possible classes.

Note: Sometimes the distribution of class-conditional marginal densities is far from normal. In these cases, kernel density estimation can be used for a more realistic estimate of the marginal densities of each class.

Event Model - Discrete Data (Text Classification)

For discrete features (document classification), Multinomial and Bernoulli distributions are popular. With a multinomial event model, features represent frequencies of events (say, count of word occurrences). With a bernoulli event model, features represent presence or absence of events (say, presence or absence of words).

Binary and Multiclass

Naive Bayes can be both binary and multiclass.

Summary

In summary, Naive Bayes is:

- Probabilistic

- Generative

- Binary and Multiclass

- Linear

- Parametric

Why Naive?

Naive Bayes makes an assumption that the input attributes are independent of each other. This results in a significant reduction in the number of parameters the model needs to learn. This is because, since each attribute is assumed to be influenced only by the class its data poin belongs to, the model only has P(X_i|Y_k) terms, and no P(X_i|X_j), P(X_i|X_j, X_k), etc terms. This is the reason the model is called ‘Naive’ because it is seldom the case that the input attributes do not influence each other. Still, Naive Bayes has proven to be effective.

Due to the modeling assumptions of attributes being independent, Naive Bayes model introduces a lot more inductive bias as compared to Logistic Regression. This also results in faster convergence of order O(log N) (N is the number of data points). The fast convergence can perhaps be on less accurate estimates.

Algebraically solved Closed-Form

Naive Bayes class-conditional probabilities (maximum likelihood estimation) can be deduced analytically. Also, the class-priors can be deduced using frequentist methods. So, there is a closed-form solution to Naive Bayes. And, hence, we do not need a numerical method like gradient descent.

Imports

import math #to check for nan

import numpy as np

from numpy import mean, std #mean and standard deviation for gaussian probabilities

from scipy.stats import norm #gaussian probabilities

from math import log # to calculate posterior probability

from random import sample #for random sampling of data points

import tensorflow as tf

import matplotlib.pyplot as plt #display images of digits

%matplotlib inline

Data

Read

Load mnist data from Tensorflow Keras library

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

Sanity check for data getting loaded

print([i.shape for i in (x_train, y_train, x_test, y_test)])

print([type(i) for i in (x_train, y_train, x_test, y_test)])

print('classes, num_classes: ', np.unique(y_train), len(np.unique(y_train)))

[(60000, 28, 28), (60000,), (10000, 28, 28), (10000,)]

[<class 'numpy.ndarray'>, <class 'numpy.ndarray'>, <class 'numpy.ndarray'>, <class 'numpy.ndarray'>]

classes, num_classes: [0 1 2 3 4 5 6 7 8 9] 10

visualize

def visualize(rows, cols, x_, l_i):

fig = plt.figure() #create a figure

axes = [] #accumulate axes of subplots we create in the figure

#for i_subplot in range rows*cols

for i_sp in range(rows*cols): #iterate row * col times

axes.append(fig.add_subplot(rows, cols, i_sp + 1)) #create a new subplot and accumulate its axis

plt.imshow(x_[l_i[i_sp]])

m, n = 5, 5

visualize(m, n, x_train, sample(range(x_train.shape[0]), m*n))

Model

Training Algorithm

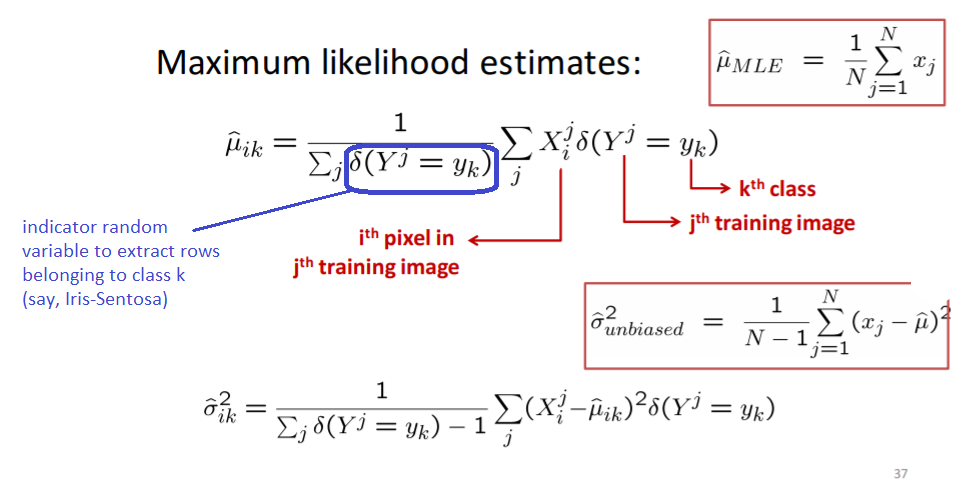

<img src="/ml/assets/images/mnist-gnb/gnb_mean_std.png">'''

return

classes: (list) of unique class names in the dataset,

got from the last column named class_colname.

features: (list) of features (column names) in the dataset.

this excludes the last column which we expect it to have the class labels.

prior: (1-d array) of dim num_classes

(prior probability of a set of features belonging to a class)

mean_std: (3-d array) of dim num_classes x num_features x 2 (2: mean and std)

(mean and standard deviation for all features, given the class)

arguments:

df: (dataframe) with features and class names (should have a 'class' column in addition to the feature columns).

class_colname: (string) provide suitable column name otherwise, using the class_colname argument.

'''

def train_gaussian_nb(X, y):

#number of classes

classes = np.unique(y)

num_classes = len(classes)

#number of data points and features

N, dim_x, dim_y = X.shape[0], X.shape[1], X.shape[2]

#data structures for priors and

# (mean, standard deviation) pairs for each feature and class

# to later calculate likelihood (conditional probability of feature given class)

prior = np.zeros(num_classes, dtype=float)

X_mean = np.empty((num_classes, dim_x, dim_y), dtype=float)

X_std = np.empty((num_classes, dim_x, dim_y), dtype=float)

#for each class...

for cls in range(num_classes):

#use a boolean index list to extract images of this class

bool_idx_list = [True if c==cls else False for c in y]

X_cls = X[bool_idx_list]

#calculate prior probability of data point belonging to class cls

prior[cls] = len(X_cls) / N

#for each dim_x by dim_y, calculate mean and std, across all data points of this class

X_mean[cls] = mean(X_cls, axis=0)

X_std[cls] = std(X_cls, axis=0)

return classes, dim_x, dim_y, prior, X_mean, X_std

Prediction Algorithm

'''

return (integer) the (0-based) index of class to which the document belongs

arguments:

num_classes: (int) number of classes

num_features: (int) number of features

prior: (1-d array) of dim num_classes

(prior probability of a set of features belonging to a class)

mean_std: (3-d array) of dim num_classes x num_features x 2 (2: mean and std)

(mean and standard deviation for all features, given the class)

x: (list) of features

'''

def apply_gaussian_naive_bayes(num_classes, dim_x, dim_y, prior, X_mean, X_std, x):

score = np.zeros((num_classes), dtype=float)

#for each class...

for cls in range(num_classes):

#for this class, add the log-prior probability to the score

score[cls] += log(prior[cls], 10) #log to the base 10

#for each feature, add the log-likelihood to the score

for i_x in range(dim_x):

for i_y in range(dim_y):

#calculate likelihood from the trained mean and standard deviation

mu = X_mean[cls][i_x][i_y]

sigma = X_std[cls][i_x][i_y]

likelihood = norm(mu, sigma).pdf(x[i_x][i_y])

#print(mu, sigma, x[i_x][i_y], likelihood)

#add the log-likelihood to the score

#ValueError exception raised if likelihood is 0 and we take a log.

#To avoid this, we skip adding log_likelihood to the score.

#We may argue that score should be penalized for

# such a great mismatch of pixel intensity for a class,

# but, these pixels are more of a candidate of noise, and can be ignored.

#Also, skip NaN values for likelihood (when mu and sigma are 0 and so is x)

if (math.isnan(likelihood) == True or likelihood == 0):

continue

#print(likelihood)

score[cls] += log(likelihood, 10) #log to the base 10

#return the index of class with the maximum-a-posterior probability

return score.argmax()

Learn

Learn

#train the prior and likelihood on observed data (x_train, y_train)

classes, dim_x, dim_y, prior, X_mean, X_std = train_gaussian_nb(x_train, y_train)

Sanity check the priors

Note that all priors are nearly 10% probable, since we have 10 digits and balanced-class data.

print('Prior Probability P(Y) of the 10 digits:\n', prior)

Prior Probability P(Y) of the 10 digits:

[0.09871667 0.11236667 0.0993 0.10218333 0.09736667 0.09035

0.09863333 0.10441667 0.09751667 0.09915 ]

Sanity check the trained mean and standard deviation

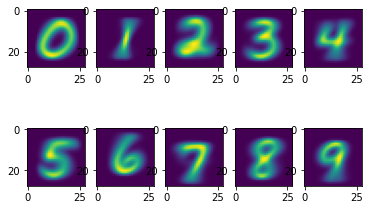

mean

m, n = 2, 5

visualize(m, n, X_mean, [i for i in range(m*n)])

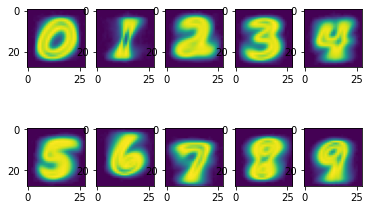

standard deviation

m, n = 2, 5

visualize(m, n, X_std, [i for i in range(m*n)])

Prediction

#iterate over test dataset and count the number of correct and incorrect predictions

count_correct, count_incorrect = 0, 0

#since each prediction is taking ~6 seconds, we can predict 10 digits per minute.

#allowing the classification to run for 10 minutes, we will have 100 predictions.

#so, setting the range as 0..100

l_pred_cls = []

for i in range(50): #len(x_test)):

#actual class

actual_cls = y_test[i]

#predicted class

# input provided as row[:-1].to_list(), means, all columns except last, converted to a list

pred_cls = apply_gaussian_naive_bayes(len(classes), dim_x, dim_y, prior, X_mean, X_std, x_test[i])

l_pred_cls.append(pred_cls)

if classes[pred_cls] == actual_cls:

count_correct += 1

else:

count_incorrect += 1

#print('(predicted, actual):', pred_cls, actual_cls)

C:\Users\jeete\.conda\envs\Python37Env\lib\site-packages\scipy\stats\_distn_infrastructure.py:1760: RuntimeWarning: invalid value encountered in double_scalars

x = np.asarray((x - loc)/scale, dtype=dtyp)

C:\Users\jeete\.conda\envs\Python37Env\lib\site-packages\scipy\stats\_distn_infrastructure.py:1760: RuntimeWarning: divide by zero encountered in double_scalars

x = np.asarray((x - loc)/scale, dtype=dtyp)

Prediction Accuracy

print('Correct: ', count_correct, 'Incorrect: ', count_incorrect)

print('Percentage of correct predictions: ', (count_correct * 100)/(count_correct + count_incorrect))

Correct: 29 Incorrect: 21

Percentage of correct predictions: 58.0

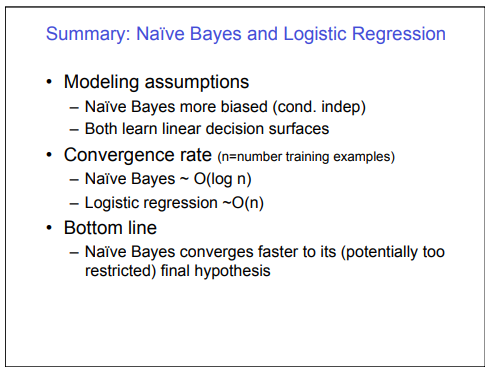

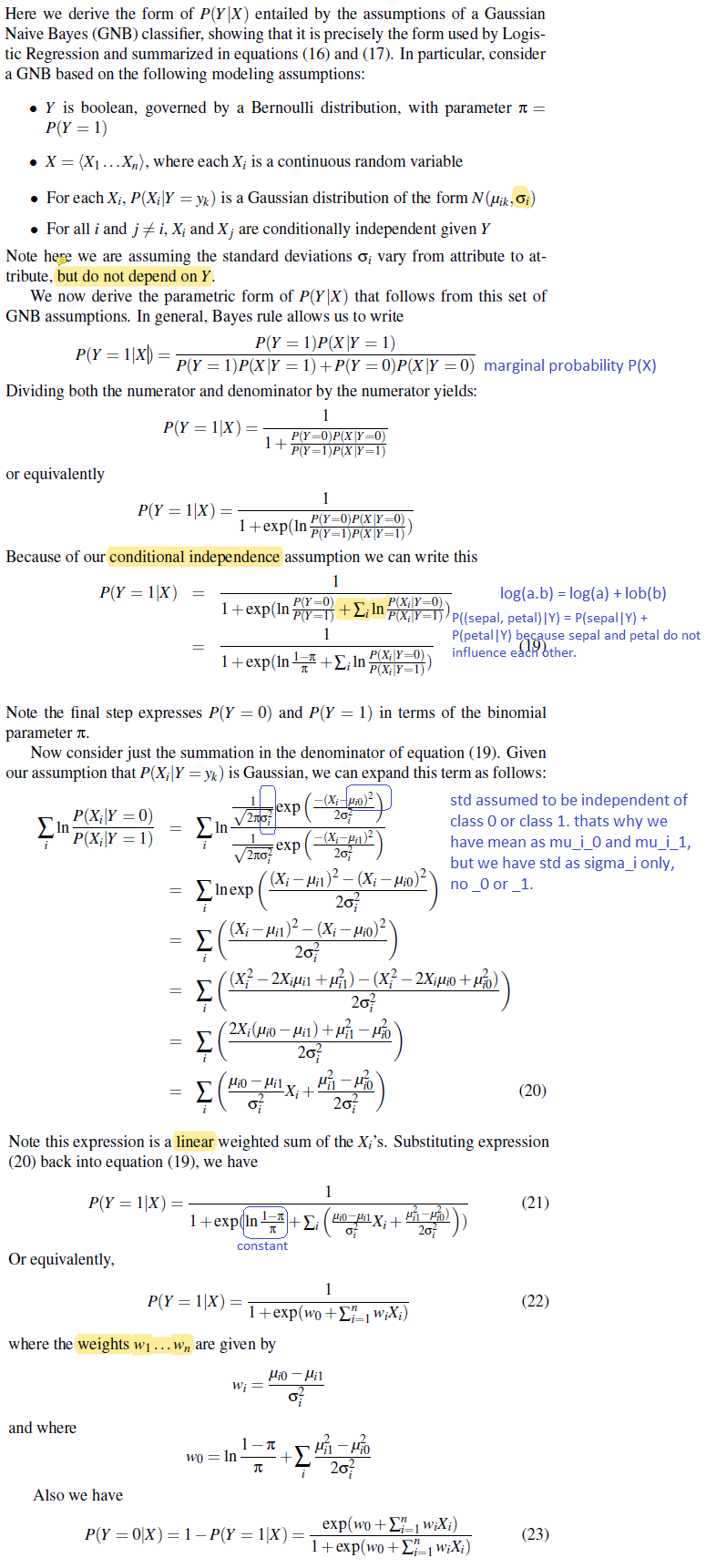

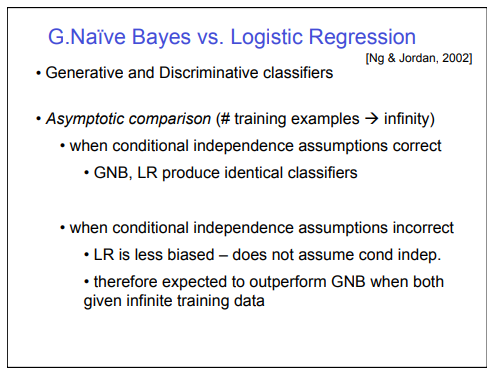

Sidebar - Comparison of Naive Bayes and Logistic Regression

Generative and Discriminative Classifiers : Naive Bayes and Logistic Regression - Tom Mitchell

Same Parametric Form (under attribute independence assumptions)

The parametric form of P(Y|X) used by Logistic Regression is precisely the form implied by the assumptions of a Gaussian Naive Bayes clasifier.

Attribute Independence Assumption; Convergence; Input Data Size

Asymptotic Comparison

Summary